Overview: Population Based Training (PBT)

Population-Based Training (PBT) is a technique for efficiently searching and adapting hyperparameters during reinforcement learning.

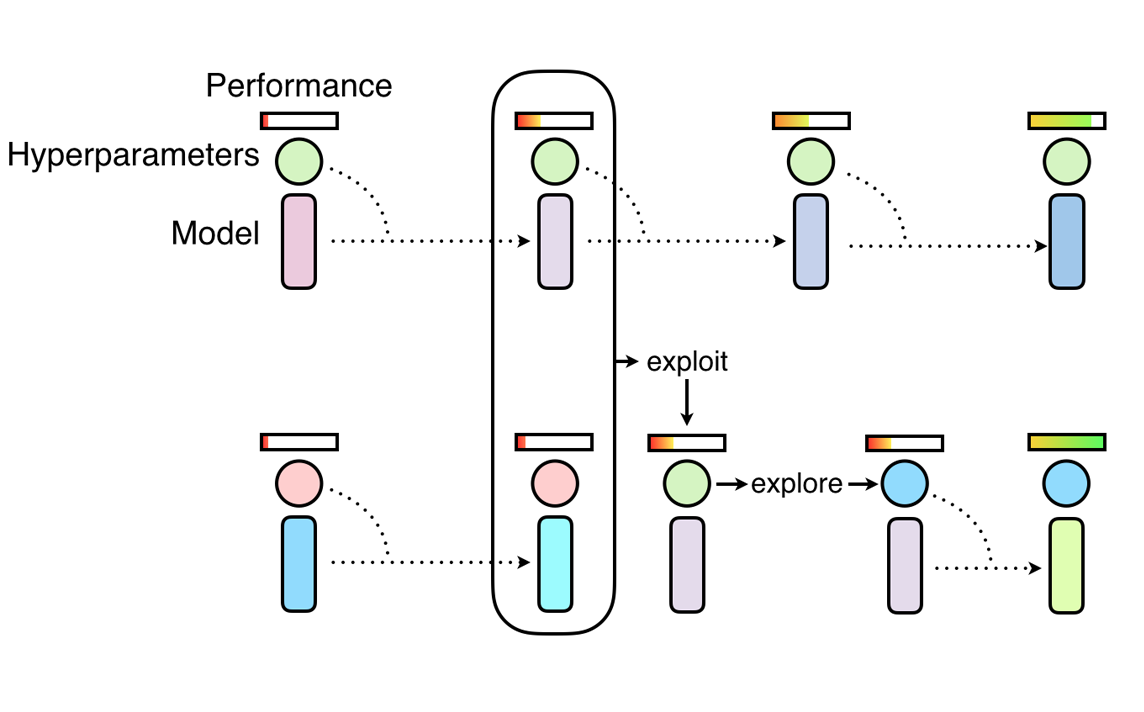

Instead of running a fixed hyperparameter search, PBT trains a population of models in parallel, each initialized with different random hyperparameters. Over time, the population evolves by exploiting information from the best-performing models and exploring new hyperparameter variations.

PBT is inspired by genetic algorithms and is built on two operations:

- Exploit: Poorly performing models adopt the weights and hyperparameters of stronger performers.
- Explore: These cloned hyperparameters are then slightly perturbed to introduce variation.
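The two operations can be sketched in plain Python. This is a minimal illustration, not the implementation used by any particular library: the worker dictionary layout, the `exploit_and_explore` name, the bottom/top fraction of 25%, and the 0.8/1.2 perturbation factors are all assumptions chosen for the example.

```python
import copy
import random


def exploit_and_explore(population, frac=0.25, factors=(0.8, 1.2), rng=random):
    """Apply one exploit/explore step to a list of workers, in place.

    Each worker is a dict with "weights", "hparams" (name -> float) and
    "score" (higher is better). This layout is hypothetical.
    Assumes frac <= 0.5 so the top and bottom groups do not overlap.
    """
    ranked = sorted(population, key=lambda w: w["score"], reverse=True)
    cutoff = max(1, int(len(ranked) * frac))
    top, bottom = ranked[:cutoff], ranked[-cutoff:]

    for worker in bottom:
        donor = rng.choice(top)
        # Exploit: clone the weights and hyperparameters of a strong performer.
        worker["weights"] = copy.deepcopy(donor["weights"])
        worker["hparams"] = dict(donor["hparams"])
        # Explore: scale each cloned hyperparameter slightly up or down.
        for name in worker["hparams"]:
            worker["hparams"][name] *= rng.choice(factors)
    return population


# Toy population of four workers with made-up scores and learning rates.
population = [
    {"weights": [float(i)], "hparams": {"lr": 0.01 * (i + 1)}, "score": float(i)}
    for i in range(4)
]
exploit_and_explore(population)
for worker in population:
    print(worker["score"], worker["weights"], worker["hparams"])
```

After the call, the lowest-scoring worker carries a copy of the best worker's weights and a perturbed copy of its learning rate, while the other workers are untouched. The stored score of a replaced worker is stale until it is evaluated again.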

This exploit → explore cycle repeats periodically throughout training, so the population adapts dynamically rather than following a fixed hyperparameter schedule. Each round keeps every worker in the population at a good baseline level of performance while continuing to test new hyperparameter configurations. As a result, PBT can quickly exploit good hyperparameters, dedicate more training time to promising models and, crucially, mutate hyperparameter values during training, which lets it learn an adaptive hyperparameter schedule instead of a single fixed setting.

In practice, the population is the set of Tune trials running in parallel. Trial performance is evaluated using a user-specified metric such as `episode_return_mean`. After a specified interval, trial performances are compared, the configurations of better trials replace those of worse ones, and the cycle repeats (see Display 1).
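As a rough illustration of how the metric and interval might be specified, the fragment below configures Ray Tune's `PopulationBasedTraining` scheduler. The hyperparameter names (`lr`, `gamma`), their candidate values, and the interval of 10 training iterations are assumptions made for this sketch, and the exact metric key can differ between RLlib versions.

```python
import random

from ray.tune.schedulers import PopulationBasedTraining

pbt = PopulationBasedTraining(
    # Unit in which the perturbation interval is measured.
    time_attr="training_iteration",
    # Metric used to rank trials; higher values are treated as better.
    metric="episode_return_mean",
    mode="max",
    # Compare trials and run exploit/explore every 10 iterations (assumed value).
    perturbation_interval=10,
    # Hyperparameters PBT may change, with the values or samplers it can
    # draw from when resampling (both entries are illustrative).
    hyperparam_mutations={
        "lr": [1e-3, 5e-4, 1e-4, 5e-5],
        "gamma": lambda: random.uniform(0.9, 0.999),
    },
)
```

The scheduler would then typically be passed to the tuner, for example through `tune.TuneConfig(scheduler=pbt, num_samples=...)`, where `num_samples` sets the population size.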